0 Install GStreamer

```shell
sudo apt install libgstreamer1.0-0 gstreamer1.0-plugins-base gstreamer1.0-plugins-good gstreamer1.0-plugins-bad gstreamer1.0-plugins-ugly gstreamer1.0-libav gstreamer1.0-doc gstreamer1.0-tools gstreamer1.0-x gstreamer1.0-alsa gstreamer1.0-gl gstreamer1.0-gtk3 gstreamer1.0-qt5 gstreamer1.0-pulseaudio
```

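0.1 List all plugins

Plain gst-inspect-1.0 prints a short list; with -a it prints the full details of every element.

```shell
gst-inspect-1.0 -a
```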

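0.2 Install the RTSP server

Note: the -dev packages are required. They provide the headers and pkg-config files needed to compile against GStreamer and gst-rtsp-server. The repository is cloned only to build the test-launch example.

```shell
sudo apt install libgstreamer1.0-dev libgstrtspserver-1.0-dev
git clone https://github.com/GStreamer/gst-rtsp-server.git
cd gst-rtsp-server/
# Use the branch that matches the installed GStreamer version (see gst-launch-1.0 --version)
git checkout 1.18
cd examples/
gcc test-launch.c -o test-launch $(pkg-config --cflags --libs gstreamer-rtsp-server-1.0)
```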

0.3 Check where the library is installed

```shell
ldconfig -p | grep libgstrtspserver
```

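0.4 Preview a camera

```shell
gst-launch-1.0 v4l2src device=/dev/video0 ! video/x-raw, format=NV12, width=640, height=480, framerate=30/1 ! videoconvert ! autovideosink
```

The caps must describe a mode the camera actually supports (v4l2-ctl --list-formats-ext lists them). videoconvert converts the camera format into one the display sink accepts.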
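The caps filter in the command above is just a comma-separated description of the raw video format. When the same caps are needed inside a program (for example in a launch string), they can be assembled from parameters. The following is a minimal sketch; MakeRawVideoCaps is a hypothetical helper, not a GStreamer API.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: builds a raw-video caps string such as
// "video/x-raw,format=NV12,width=640,height=480,framerate=30/1".
std::string MakeRawVideoCaps(const std::string &format, int width, int height,
                             int fps_num, int fps_den = 1) {
    assert(width > 0 && height > 0 && fps_num > 0 && fps_den > 0);
    return "video/x-raw,format=" + format +
           ",width=" + std::to_string(width) +
           ",height=" + std::to_string(height) +
           ",framerate=" + std::to_string(fps_num) + "/" + std::to_string(fps_den);
}
```

MakeRawVideoCaps("NV12", 640, 480, 30) describes the same caps as the command above. Rates such as 30000/1001 are expressed through fps_den.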

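1 Basic test video

A minimal RTSP server that encodes a videotestsrc pattern with x264 and serves it at rtsp://<host>:8554/test.

```cpp
#include <gst/gst.h>
#include <gst/rtsp-server/rtsp-server.h>

int main(int argc, char *argv[]) {
    // Initialize GStreamer
    gst_init(&argc, &argv);

    // Create the RTSP server instance and set the listening port
    GstRTSPServer *server = gst_rtsp_server_new();
    gst_rtsp_server_set_service(server, "8554");

    // Get the mount points and create a media factory
    GstRTSPMountPoints *mounts = gst_rtsp_server_get_mount_points(server);
    GstRTSPMediaFactory *factory = gst_rtsp_media_factory_new();

    // Pipeline created for clients; the payloader must be named pay0
    gst_rtsp_media_factory_set_launch(factory,
        "( videotestsrc ! video/x-raw,format=(string)I420,width=640,height=480,framerate=(fraction)30/1 "
        "! x264enc ! rtph264pay name=pay0 pt=96 )");
    // Optional: gst_rtsp_media_factory_set_shared(factory, TRUE) makes all clients share one pipeline

    // Mount the factory at /test (the mount points take ownership of the factory)
    gst_rtsp_mount_points_add_factory(mounts, "/test", factory);
    g_object_unref(mounts);

    // Attach the server to the default main context; 0 means failure
    if (gst_rtsp_server_attach(server, NULL) == 0) {
        g_printerr("Failed to attach the RTSP server\n");
        return -1;
    }
    g_print("Stream ready at rtsp://127.0.0.1:8554/test\n");

    // Run the main loop
    GMainLoop *loop = g_main_loop_new(NULL, FALSE);
    g_main_loop_run(loop);

    // Clean up
    g_main_loop_unref(loop);
    g_object_unref(server);
    return 0;
}
```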
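The launch line given to gst_rtsp_media_factory_set_launch() (and to test-launch) is wrapped in parentheses so that it parses into a bin. Its payloaders are named pay0, pay1, and so on, which is how the server finds the streams. Here is a small sketch that composes such a description from a source and encoder chain. MakeFactoryLaunch is a hypothetical helper, and it assumes an H.264 stream with dynamic payload type 96.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: wraps a source/encoder chain into the "( ... )" bin syntax
// used by gst_rtsp_media_factory_set_launch() and test-launch, naming the H.264
// payloader pay<index> so the RTSP server can find the stream.
std::string MakeFactoryLaunch(const std::string &source_chain, int pay_index = 0,
                              int payload_type = 96) {
    assert(!source_chain.empty() && pay_index >= 0);
    return "( " + source_chain + " ! rtph264pay name=pay" + std::to_string(pay_index) +
           " pt=" + std::to_string(payload_type) + " )";
}
```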

1.1 CMakeLists.txt

```cmake
cmake_minimum_required(VERSION 3.10)
project(photo_get_project)

set(CMAKE_CXX_STANDARD 11)
#set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -pthread -O3 -DNDEBUG")
set(CMAKE_CXX_FLAGS_DEBUG "$ENV{CXXFLAGS} -Wall -g -ggdb")
set(CMAKE_CXX_FLAGS_RELEASE "$ENV{CXXFLAGS} -O3 -Wall -DNDEBUG")
set(CMAKE_C_FLAGS_RELEASE "$ENV{CFLAGS} -O3 -Wall -DNDEBUG")
set(EXECUTABLE_OUTPUT_PATH ${CMAKE_SOURCE_DIR}/bin)

# Build type: Debug or Release
#set(CMAKE_BUILD_TYPE Release)

message(STATUS "yangshao: ${CMAKE_CXX_FLAGS}")

# find_package() must run before OpenCV_LIBS is printed or used
find_package(OpenCV REQUIRED)
message(STATUS "OpenCV Libraries: ${OpenCV_LIBS}")

# OpenCV installed under /usr/local/opencv4.8 on this machine
include_directories(/usr/local/opencv4.8/include/opencv4)
include_directories(/usr/include/gstreamer-1.0)
include_directories(/usr/include/glib-2.0)
# Architecture-specific glib path (aarch64 here; x86_64-linux-gnu on a typical PC)
include_directories(/usr/lib/aarch64-linux-gnu/glib-2.0/include/)

add_executable(test rtspserver.cpp)
# gstrtspserver-1.0 for the server, gstapp-1.0 for appsrc, OpenCV for cv::Mat
target_link_libraries(test PRIVATE gobject-2.0 glib-2.0 gstreamer-1.0 gstapp-1.0 gstrtspserver-1.0 ${OpenCV_LIBS})
```

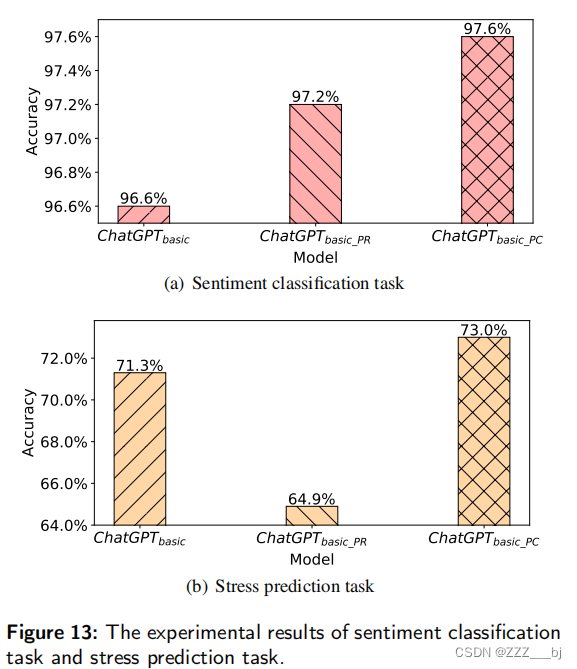

1.2 Result

Test: open rtsp://<server-ip>:8554/test in two VLC instances at the same time.

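2 Pushing a stream to an RTSP server

In this setup the program acts as an RTSP client. cv::Mat frames are fed into appsrc, encoded with x264enc and published with rtspclientsink. rtspclientsink payloads the encoded stream itself and publishes it with RTSP RECORD, so the server at the target URL must accept incoming streams. The section 1 server only answers PLAY requests.

```cpp
#include <gst/gst.h>
#include <gst/app/gstappsrc.h>
#include <opencv2/opencv.hpp>

// Frame geometry; must match the caps given to appsrc in main()
static const int kWidth = 640;
static const int kHeight = 480;
static const int kFps = 30;

class VideoSource {
public:
    explicit VideoSource(const char *pipeline_str) {
        GError *error = NULL;
        pipeline = gst_parse_launch(pipeline_str, &error);
        if (error) {
            g_printerr("Pipeline error: %s\n", error->message);
            g_clear_error(&error);
        }
        if (!pipeline) return;
        // gst_bin_get_by_name() returns a new reference, released in the destructor
        appsrc = gst_bin_get_by_name(GST_BIN(pipeline), "appsrc0");
        // Set appsrc properties: live source with time-based timestamps
        g_object_set(appsrc, "is-live", TRUE, "format", GST_FORMAT_TIME, NULL);
        // Connect the flow-control signals
        g_signal_connect(appsrc, "need-data", G_CALLBACK(OnNeedData), this);
        g_signal_connect(appsrc, "enough-data", G_CALLBACK(OnEnoughData), this);
    }

    ~VideoSource() {
        Stop();
        if (appsrc) gst_object_unref(appsrc);
        if (pipeline) gst_object_unref(pipeline);
    }

    void Start() { if (pipeline) gst_element_set_state(pipeline, GST_STATE_PLAYING); }
    void Stop() { if (pipeline) gst_element_set_state(pipeline, GST_STATE_NULL); }

private:
    // Signature of appsrc's "need-data" signal: push one frame per call
    static void OnNeedData(GstAppSrc * /*src*/, guint /*length*/, gpointer user_data) {
        static_cast<VideoSource *>(user_data)->PushFrame();
    }

    // "enough-data": the internal queue is full. Do not change the pipeline state
    // here; this runs in a streaming thread, where a state change can deadlock.
    // Frames are only pushed from need-data, so there is nothing to do.
    static void OnEnoughData(GstAppSrc * /*src*/, gpointer /*user_data*/) {}

    void PushFrame() {
        // No more frames: send end-of-stream instead of tearing down the pipeline
        if (!HasMoreFrames()) {
            gst_app_src_end_of_stream(GST_APP_SRC(appsrc));
            return;
        }
        // Get the next frame as a cv::Mat and convert it to a GstBuffer
        cv::Mat frame = GetNextFrame();
        GstBuffer *buffer = ConvertMatToGstBuffer(frame);
        // Timestamp the buffer: frame n starts at n / kFps seconds
        GST_BUFFER_PTS(buffer) = gst_util_uint64_scale(frame_count, GST_SECOND, kFps);
        GST_BUFFER_DURATION(buffer) = gst_util_uint64_scale(1, GST_SECOND, kFps);
        frame_count++;
        // Push into appsrc; push_buffer takes ownership of the buffer
        gst_app_src_push_buffer(GST_APP_SRC(appsrc), buffer);
    }

    cv::Mat GetNextFrame() {
        // Implement your frame source here (file, network stream, camera, ...)
        // and return a kWidth x kHeight CV_8UC3 (BGR) image.
        // Placeholder: a gray frame whose brightness changes over time.
        return cv::Mat(kHeight, kWidth, CV_8UC3, cv::Scalar::all(double(frame_count % 256)));
    }

    bool HasMoreFrames() {
        // Implement your end-of-source check; return false when no frames are left.
        // Placeholder: an endless live source.
        return true;
    }

    GstBuffer *ConvertMatToGstBuffer(const cv::Mat &frame) {
        // Copy the pixels into GStreamer-owned memory: cv::Mat data is not
        // g_malloc'ed and is released with the Mat, so it cannot be wrapped directly.
        // The byte layout must match the appsrc caps (BGR, kWidth x kHeight).
        cv::Mat packed = frame.isContinuous() ? frame : frame.clone();
        gsize size = packed.total() * packed.elemSize();
        GstBuffer *buffer = gst_buffer_new_allocate(NULL, size, NULL);
        gst_buffer_fill(buffer, 0, packed.data, size);
        return buffer;
    }

    GstElement *pipeline = nullptr;
    GstElement *appsrc = nullptr;
    guint64 frame_count = 0;
};

int main(int argc, char *argv[]) {
    gst_init(&argc, &argv);

    // Client pipeline: encode the frames and publish them to an RTSP server.
    // The caps describe the cv::Mat frames (OpenCV uses BGR by default).
    const char *pipeline_str =
        "appsrc name=appsrc0 is-live=true format=time "
        "caps=\"video/x-raw,format=BGR,width=640,height=480,framerate=30/1\" "
        "! videoconvert "
        "! x264enc tune=zerolatency bitrate=500 speed-preset=superfast "
        "! rtspclientsink location=\"rtsp://localhost:8554/test\"";

    VideoSource source(pipeline_str);
    source.Start();

    // Main loop
    GMainLoop *loop = g_main_loop_new(NULL, FALSE);
    g_main_loop_run(loop);
    g_main_loop_unref(loop);
    return 0;
}
```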
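With format=time, every buffer pushed into appsrc needs a presentation timestamp in nanoseconds. For a constant frame rate fps_num/fps_den, frame n starts at n × fps_den / fps_num seconds. gst_util_uint64_scale(n, GST_SECOND * fps_den, fps_num) computes exactly that. The following plain C++ sketch shows the same arithmetic without GStreamer; the function names are illustrative only.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint64_t kNanosPerSecond = 1000000000ULL;  // same value as GST_SECOND

// Presentation time of frame n at fps_num/fps_den frames per second, in ns.
// Rounds down like gst_util_uint64_scale(). Unlike that function, the product
// n * 1e9 * fps_den must fit in 64 bits: about 1.8e10 frames for fps_den = 1,
// far fewer for rates such as 30000/1001.
uint64_t FramePts(uint64_t n, uint64_t fps_num, uint64_t fps_den = 1) {
    assert(fps_num > 0 && fps_den > 0);
    return n * kNanosPerSecond * fps_den / fps_num;
}

// Duration of frame n, taken as the gap to the next frame's timestamp
uint64_t FrameDuration(uint64_t n, uint64_t fps_num, uint64_t fps_den = 1) {
    return FramePts(n + 1, fps_num, fps_den) - FramePts(n, fps_num, fps_den);
}
```

Deriving each duration from consecutive timestamps keeps the running total exact: 30 frames at 30/1 add up to exactly one second. A fixed duration of 33333333 ns would drift slightly.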

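3 Using cv::Mat as the input source

The code for this case is the same as in section 2. Each frame is obtained as a cv::Mat from GetNextFrame() and converted to a GstBuffer by ConvertMatToGstBuffer() before it is pushed into appsrc.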

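The caps declared on appsrc must match the bytes that are actually pushed. OpenCV stores colour frames as packed BGR (3 bytes per pixel). A cv::Mat can also have padded rows (step larger than cols × elemSize), for example when it is a region of interest. The sketch below uses standard C++ only. It computes the expected frame size for a few raw formats and copies a strided image into a tightly packed buffer. RawFrameBytes and PackRows are hypothetical helpers.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: bytes in one tightly packed frame for a few raw formats.
// Assumes width is a multiple of 8 and height is even. For other sizes,
// GStreamer's default layout pads rows (e.g. to 4-byte strides), so the real
// buffer size would differ.
size_t RawFrameBytes(const std::string &format, size_t width, size_t height) {
    assert(width % 8 == 0 && height % 2 == 0);
    if (format == "BGR" || format == "RGB") return width * height * 3;        // CV_8UC3
    if (format == "BGRA" || format == "RGBA") return width * height * 4;      // CV_8UC4
    if (format == "I420" || format == "NV12") return width * height * 3 / 2; // Y + 2x2-subsampled chroma
    throw std::invalid_argument("unsupported format: " + format);
}

// Copies `rows` rows of `row_bytes` payload each from a source whose rows start
// `src_step` bytes apart (cv::Mat::step) into one contiguous buffer.
std::vector<uint8_t> PackRows(const uint8_t *src, size_t rows, size_t row_bytes,
                              size_t src_step) {
    assert(src_step >= row_bytes);
    std::vector<uint8_t> out(rows * row_bytes);
    for (size_t r = 0; r < rows; ++r)
        std::memcpy(out.data() + r * row_bytes, src + r * src_step, row_bytes);
    return out;
}
```

For a cv::Mat m, PackRows(m.data, m.rows, m.cols * m.elemSize(), m.step) yields the contiguous layout that the caps describe. When m.isContinuous() is true, the copy is unnecessary.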

4 Acting as the RTSP server itself

When a client connects, the server's media factory builds its own pipeline from the launch line. A pipeline created separately with gst_pipeline_new() is never seen by clients. Instead, the appsrc inside the factory's pipeline is looked up in the factory's media-configure signal, and need-data is connected there. This is the same approach used by test-appsrc.c in the gst-rtsp-server examples. The stream is served at rtsp://<host>:8554/stream.

```cpp
#include <gst/gst.h>
#include <gst/app/gstappsrc.h>
#include <gst/rtsp-server/rtsp-server.h>
#include <opencv2/opencv.hpp>

// Frame geometry; must match the caps in the factory launch line
static const int kWidth = 640;
static const int kHeight = 480;
static const int kFps = 30;

class RtspsServerApp {
public:
    RtspsServerApp() {
        // Create the RTSP server and its mount points
        server = gst_rtsp_server_new();
        gst_rtsp_server_set_service(server, "8554");
        GstRTSPMountPoints *mounts = gst_rtsp_server_get_mount_points(server);
        GstRTSPMediaFactory *factory = gst_rtsp_media_factory_new();

        // The factory creates this pipeline for clients; its appsrc is fed by OnNeedData()
        gst_rtsp_media_factory_set_launch(factory,
            "( appsrc name=source is-live=true format=time "
            "caps=\"video/x-raw,format=BGR,width=640,height=480,framerate=30/1\" "
            "! videoconvert ! x264enc tune=zerolatency speed-preset=superfast "
            "! rtph264pay name=pay0 pt=96 )");
        // One shared pipeline (and therefore one appsrc) for all clients
        gst_rtsp_media_factory_set_shared(factory, TRUE);
        // Emitted after the factory has built a media pipeline: hook up its appsrc there
        g_signal_connect(factory, "media-configure", G_CALLBACK(OnMediaConfigure), this);

        // The mount points take ownership of the factory
        gst_rtsp_mount_points_add_factory(mounts, "/stream", factory);
        g_object_unref(mounts);

        // Attach the server to the default main context
        source_id = gst_rtsp_server_attach(server, NULL);
    }

    ~RtspsServerApp() {
        if (source_id != 0) g_source_remove(source_id);
        g_object_unref(server);
    }

private:
    static void OnMediaConfigure(GstRTSPMediaFactory * /*factory*/, GstRTSPMedia *media,
                                 gpointer user_data) {
        RtspsServerApp *self = static_cast<RtspsServerApp *>(user_data);
        // Both calls return new references, released below
        GstElement *element = gst_rtsp_media_get_element(media);
        GstElement *appsrc = gst_bin_get_by_name_recurse_up(GST_BIN(element), "source");
        // Timestamps start from zero for each new media pipeline
        self->frame_count = 0;
        g_signal_connect(appsrc, "need-data", G_CALLBACK(OnNeedData), self);
        gst_object_unref(appsrc);
        gst_object_unref(element);
    }

    // Signature of appsrc's "need-data" signal: push one frame per call
    static void OnNeedData(GstAppSrc *appsrc, guint /*length*/, gpointer user_data) {
        RtspsServerApp *self = static_cast<RtspsServerApp *>(user_data);
        cv::Mat frame = self->GetNextFrame();
        GstBuffer *buffer = ConvertMatToGstBuffer(frame);
        // Frame n starts at n / kFps seconds
        GST_BUFFER_PTS(buffer) = gst_util_uint64_scale(self->frame_count, GST_SECOND, kFps);
        GST_BUFFER_DURATION(buffer) = gst_util_uint64_scale(1, GST_SECOND, kFps);
        self->frame_count++;
        // push_buffer takes ownership of the buffer
        gst_app_src_push_buffer(appsrc, buffer);
    }

    cv::Mat GetNextFrame() {
        // Implement your frame source here and return a kWidth x kHeight
        // CV_8UC3 (BGR) image.
        // Placeholder: a gray frame whose brightness changes over time.
        return cv::Mat(kHeight, kWidth, CV_8UC3, cv::Scalar::all(double(frame_count % 256)));
    }

    static GstBuffer *ConvertMatToGstBuffer(const cv::Mat &frame) {
        // Copy the pixels into GStreamer-owned memory (cv::Mat data is not
        // g_malloc'ed and is freed when the Mat goes out of scope)
        cv::Mat packed = frame.isContinuous() ? frame : frame.clone();
        gsize size = packed.total() * packed.elemSize();
        GstBuffer *buffer = gst_buffer_new_allocate(NULL, size, NULL);
        gst_buffer_fill(buffer, 0, packed.data, size);
        return buffer;
    }

    GstRTSPServer *server = nullptr;
    guint source_id = 0;
    guint64 frame_count = 0;
};

int main(int argc, char *argv[]) {
    // GStreamer must be initialized before any server object is created
    gst_init(&argc, &argv);
    RtspsServerApp app;
    g_print("Stream ready at rtsp://127.0.0.1:8554/stream\n");

    // Main loop
    GMainLoop *loop = g_main_loop_new(NULL, FALSE);
    g_main_loop_run(loop);
    g_main_loop_unref(loop);
    return 0;
}
```
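In practice, frames often come from another thread, such as a capture loop or an inference step. need-data, however, is emitted from a GStreamer streaming thread. One common arrangement is a mutex-protected slot. The producer overwrites the newest frame, and the need-data handler takes a copy. If nothing new has arrived, the handler can repeat the previous frame so that the live stream keeps its frame rate. The sketch below uses standard C++ only. LatestFrame is a hypothetical class, and the byte vector stands in for cv::Mat pixel data.

```cpp
#include <cassert>
#include <cstdint>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical holder for the most recent frame.
// Producer thread calls Publish(); the need-data handler calls Take().
class LatestFrame {
public:
    void Publish(std::vector<uint8_t> frame) {
        std::lock_guard<std::mutex> lock(mutex_);
        frame_ = std::move(frame);  // an unread older frame is simply dropped
        ++sequence_;
    }

    // Copies the newest frame into `out`. Returns false if nothing was ever
    // published; `fresh` reports whether it changed since the previous Take().
    bool Take(std::vector<uint8_t> &out, bool &fresh) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (sequence_ == 0) return false;
        out = frame_;
        fresh = (sequence_ != last_taken_);
        last_taken_ = sequence_;
        return true;
    }

private:
    std::mutex mutex_;
    std::vector<uint8_t> frame_;
    uint64_t sequence_ = 0;    // number of frames published so far
    uint64_t last_taken_ = 0;  // sequence number seen by the last Take()
};
```

In OnNeedData, the copied bytes would take the place of GetNextFrame(). Whether a repeated frame (fresh == false) is pushed or skipped is a design choice; pushing it keeps the timestamps advancing at the nominal rate.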
